- Nemotron 3 is an open family of models, data, and libraries focused on agentic AI and multi-agent systems.

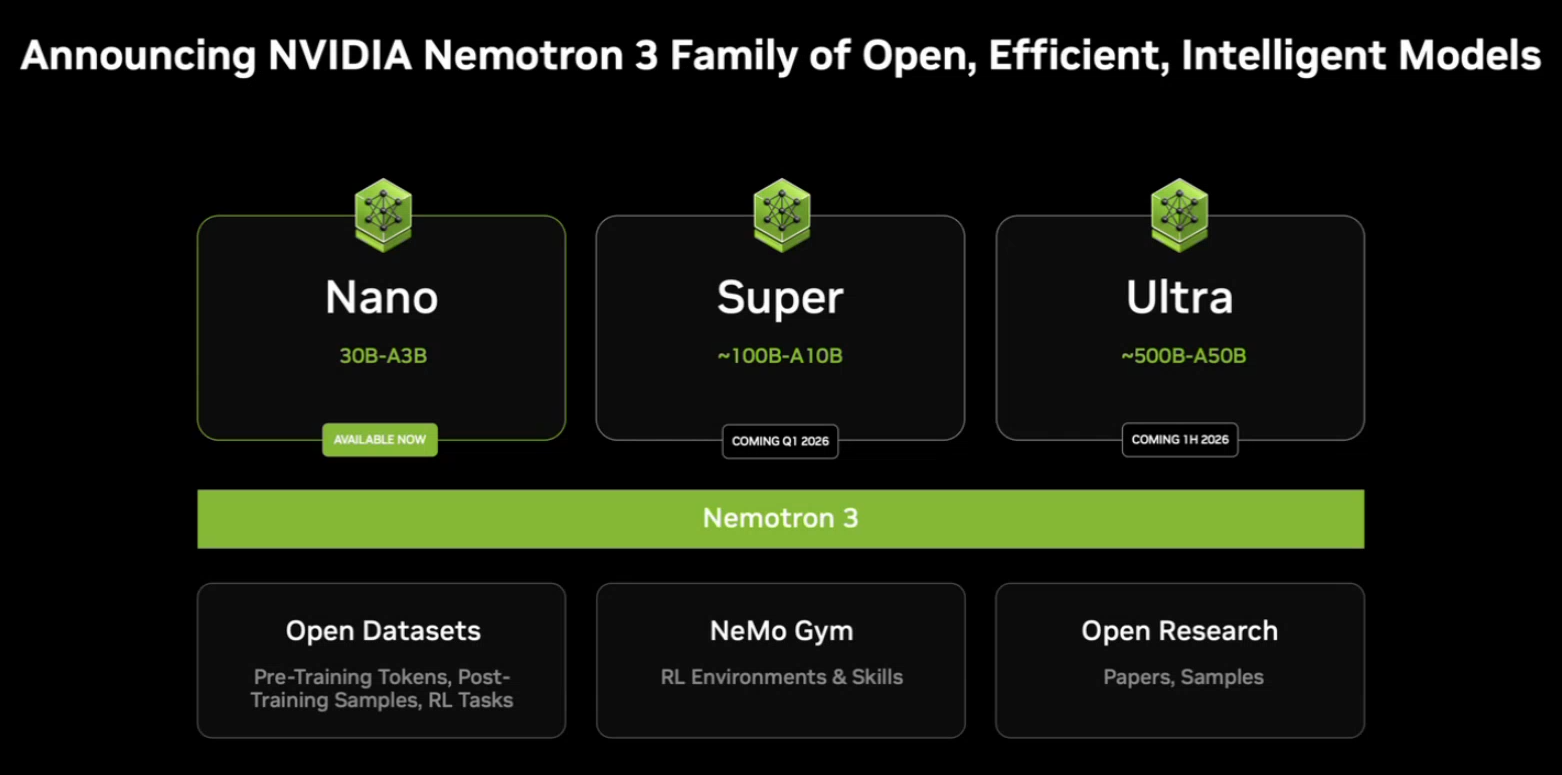

- It includes three MoE sizes (Nano, Super and Ultra) with hybrid architecture and efficient 4-bit training on NVIDIA Blackwell.

- Nemotron 3 Nano is now available in Europe via Hugging Face, public clouds and as a NIM microservice, with a window of 1 million tokens.

- The ecosystem is completed with massive datasets, NeMo Gym, NeMo RL and Evaluator to train, tune and audit sovereign AI agents.

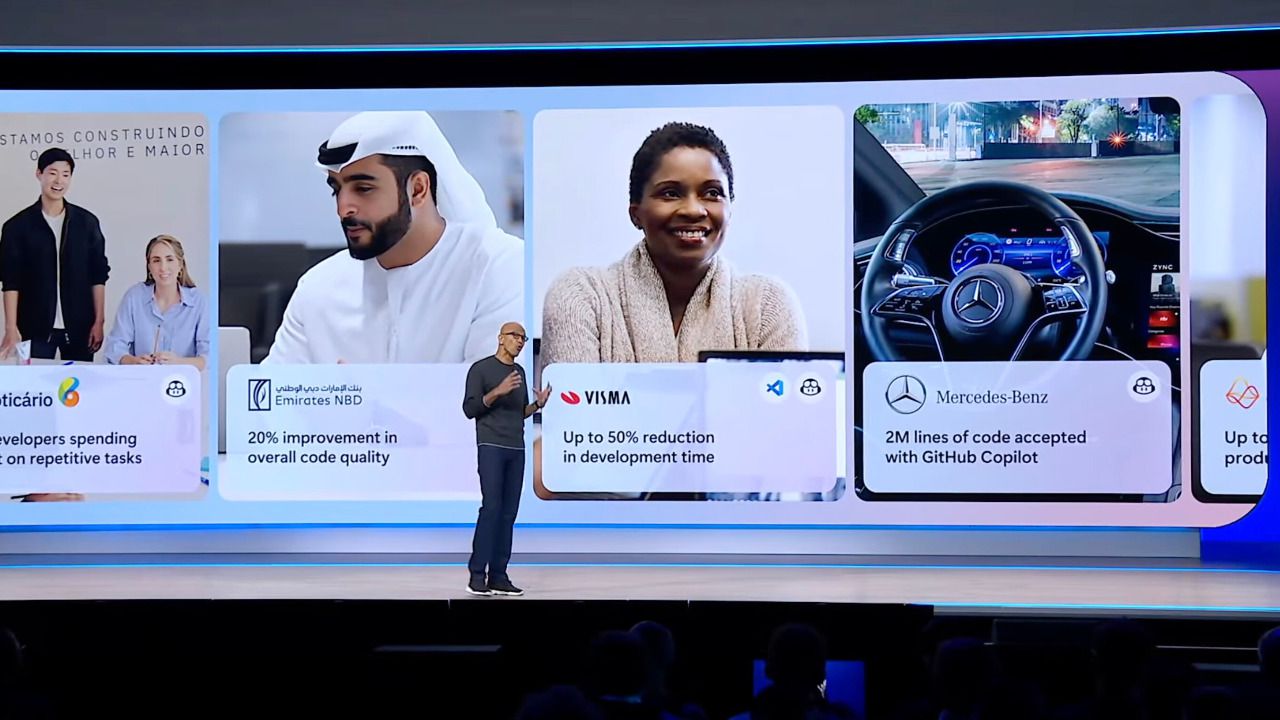

The race for artificial intelligence is moving from simple, isolated chatbots to agent systems that collaborate with each other, manage long workflows, and need to be auditable. In this new scenario, NVIDIA has decided to take a fairly clear step: to open not only models, but also data and toolsso that companies, public administrations and research centers can build their own AI platforms with more control.

That movement materializes in Nemotron 3, a family of open models geared towards multi-agent AI It seeks to combine high performance, low inference costs, and transparency. The proposal is not intended as just another general-purpose chatbot, but as a base on which to deploy agents that reason, plan and execute complex tasks in regulated sectorsThis is especially relevant in Europe and Spain, where data sovereignty and regulatory compliance are important.

An open family of models for agentic and sovereign AI

Nemotron 3 is presented as a complete ecosystem: models, datasets, libraries, and training recipes under open licenses. NVIDIA's idea is that organizations not only consume AI as an opaque service, but can inspect what's inside, adapt the models to their domains, and deploy them on their own infrastructure, whether in the cloud or in local data centers.

The company frames this strategy within its commitment to Sovereign AIGovernments and companies in Europe, South Korea, and other regions are seeking open alternatives to closed or foreign systems, which often don't align well with their data protection laws or audit requirements. Nemotron 3 aims to be the technical foundation upon which to build national, sectoral, or corporate models with greater visibility and control.

In parallel, NVIDIA strengthens its position beyond hardwareUntil now, it was primarily a reference GPU provider; with Nemotron 3, it also positions itself in the modeling and training tools layer, competing more directly with players like OpenAI, Google, Anthropic, or even Meta, and against premium models like SuperGrok HeavyMeta has been reducing its commitment to open source in recent generations of Llama.

For the European research and startup ecosystem—heavily reliant on open models hosted on platforms like Hugging Face—the availability of weights, synthetic data, and libraries under open licenses represents a powerful alternative to the chinese models and Americans who dominate the popularity and benchmark rankings.

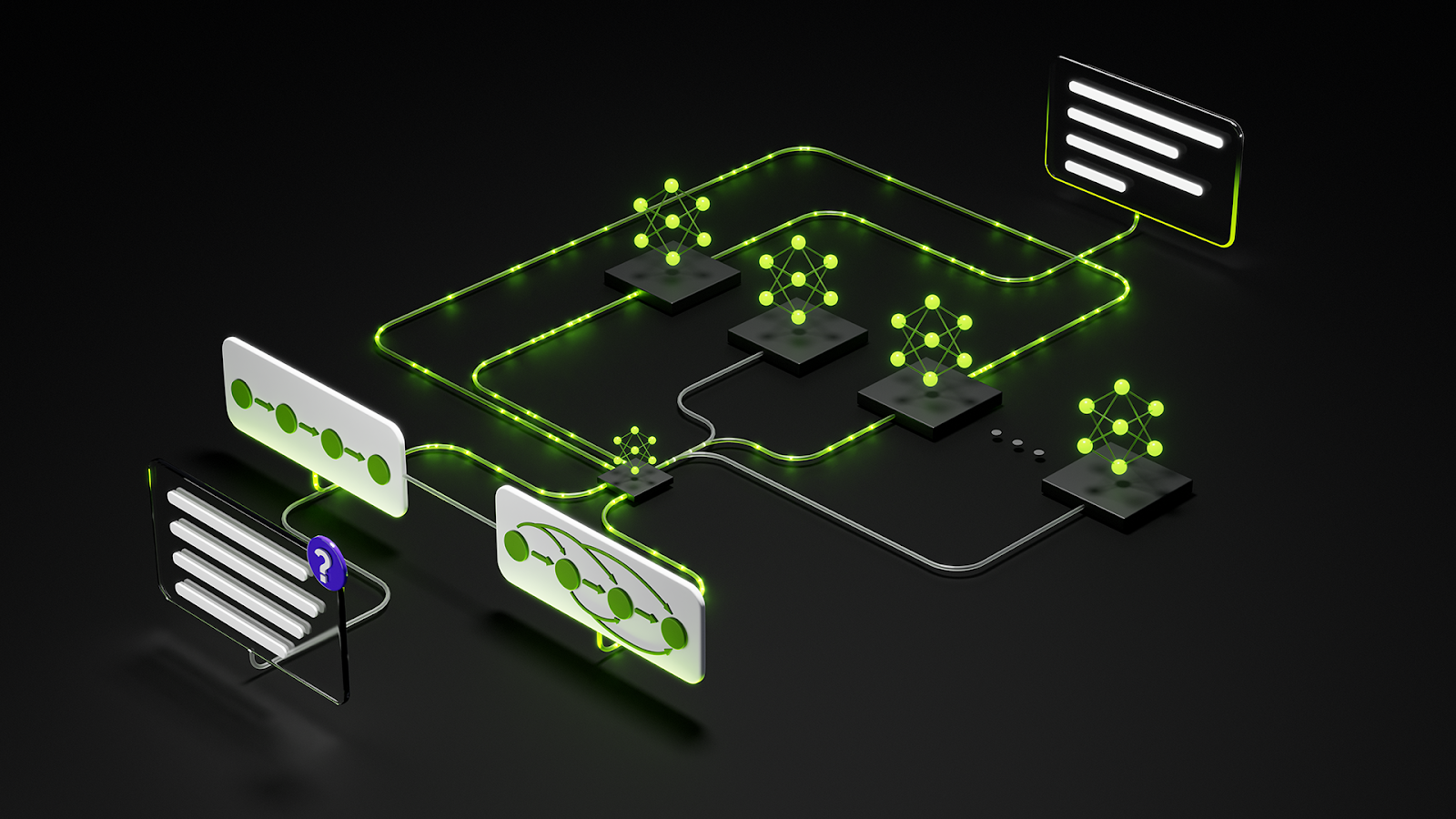

Hybrid MoE architecture: efficiency for large-scale agents

The central technical feature of Nemotron 3 is a Hybrid architecture of latent mixture-of-experts (MoE)Instead of activating all the model's parameters in each inference, only a fraction of them are turned on, the subset of experts most relevant to the task or token in question.

This approach allows drastically reduce computational cost and memory consumptionThis also increases token throughput. For multi-agent architectures, where dozens or hundreds of agents continuously exchange messages, this efficiency is key to preventing the system from becoming unsustainable in terms of GPU and cloud costs.

According to data shared by NVIDIA and independent benchmarks, the Nemotron 3 Nano achieves up to four times more tokens per second Compared to its predecessor, the Nemotron 2 Nano, it reduces the generation of unnecessary reasoning tokens by around 60%. In practice, this means equally or even more accurate answers, but with less "wordiness" and a lower cost per query.

The hybrid MoE architecture, combined with specific training techniques, has led to Many of the most advanced open models adopt expert schemesNemotron 3 joins this trend, but focuses specifically on agentic AI: internal routes designed for coordination between agents, use of tools, handling of long states, and step-by-step planning.

Three sizes: Nano, Super, and Ultra for different workloads

The Nemotron 3 family is organized into three main sizes of MoE model, all of them open and with reduced active parameters thanks to the expert architecture:

- Nemotron 3 Nano: around 30.000 billion total parameters, with about 3.000 billion assets per tokenIt is designed for targeted tasks where efficiency matters: software debugging, document summarization, information retrieval, system monitoring, or specialized AI assistants.

- Nemotron 3 Super: approximately 100.000 billion parameters, with 10.000 billion in assets at every step. It is geared towards Advanced reasoning in multi-agent architectureswith low latency even when multiple agents cooperate to solve complex flows.

- Nemotron 3 Ultra: the upper level, with approximately 500.000 billion parameters and up to 50.000 billion assets per tokenIt operates as a powerful reasoning engine for research, strategic planning, high-level decision support, and particularly demanding AI systems.

In practice, this allows organizations Choose the model size according to your budget and requirementsNano for massive, intensive workloads and tight costs; Super when more depth of reasoning is needed with many collaborating agents; and Ultra for cases where quality and long context outweigh GPU cost.

For now, Only the Nemotron 3 Nano is available for immediate use.The Super and Ultra variants are planned for the first half of 2026, giving European companies and laboratories time to experiment first with Nano, establish pipelines and, later, migrate cases that require greater capacity.

Nemotron 3 Nano: 1 million token window and contained cost

Nemotron 3 Nano is, as of today, the practical spearhead of the familyNVIDIA describes it as the most computationally cost-efficient model in the range, optimized to deliver maximum performance in multi-agent workflows and intensive but repetitive tasks.

Among its technical features, the following stand out: context window of up to one million tokensThis allows for the retention of memory for extensive documents, entire code repositories, or multi-step business processes. For European applications in banking, healthcare, or public administration, where records can be voluminous, this long-term context capability is particularly valuable.

The benchmarks of the independent organization Artificial analysis places Nemotron 3 Nano as one of the most balanced open-source models It combines intelligence, accuracy, and speed, with throughput rates in the hundreds of tokens per second. This combination makes it attractive to AI integrators and service providers in Spain who need a good user experience without skyrocketing infrastructure costs.

In terms of use cases, NVIDIA is targeting Nano at Content summary, software debugging, information retrieval, and enterprise AI assistantsThanks to the reduction of redundant reasoning tokens, it is possible to run agents that maintain long conversations with users or systems without the inference bill skyrocketing.

Open data and libraries: NeMo Gym, NeMo RL and Evaluator

One of the most distinctive features of Nemotron 3 is that It is not limited to releasing model weightsNVIDIA accompanies the family with a comprehensive suite of open resources for training, tuning, and evaluating agents.

On the one hand, it makes available a synthetic corpus of several trillion tokens of pre-training, post-training, and reinforcement dataThese datasets, focused on reasoning, coding, and multi-step workflows, allow companies and research centers to generate their own domain-specific variants of Nemotron (e.g., legal, healthcare, or industrial) without starting from scratch.

Among these resources, the following stands out: Nemotron Agentic Safety datasetIt collects telemetry data on agent behavior in real-world scenarios. Its goal is to help teams measure and strengthen the security of complex autonomous systems: from what actions an agent takes when it encounters sensitive data, to how it reacts to ambiguous or potentially harmful commands.

Regarding the tools section, NVIDIA is launching NeMo Gym and NeMo RL as open source libraries for reinforcement training and post-training, along with NeMo Evaluator for assessing safety and performance. These libraries provide ready-to-use simulation environments and pipelines with the Nemotron family, but can be extended to other models.

All this material—weights, datasets, and code—is distributed through GitHub and Hugging Face are licensed under the NVIDIA Open Model License.so that European teams can seamlessly integrate it into their own MLOps. Companies like Prime Intellect and Unsloth are already incorporating NeMo Gym directly into their workflows to simplify reinforcement learning on Nemotron.

Availability in public clouds and the European ecosystem

Nemotron 3 Nano is now available at Hugging Face y GitHubas well as through inference providers such as Baseten, DeepInfra, Fireworks, FriendliAI, OpenRouter, and Together AI. This opens the door for development teams in Spain to test the model via API or deploy it on their own infrastructures without excessive complexity.

On the cloud front, Nemotron 3 Nano joins AWS via Amazon Bedrock for serverless inference, and has announced support for Google Cloud, CoreWeave, Crusoe, Microsoft Foundry, Nebius, Nscale, and Yotta. For European organizations already working on these platforms, this makes it easier to adopt Nemotron without drastic changes to their architecture.

In addition to the public cloud, NVIDIA is promoting the use of Nemotron 3 Nano as NIM microservice deployable on any NVIDIA-accelerated infrastructureThis allows for hybrid scenarios: part of the load in international clouds and part in local data centers or in European clouds that prioritize data residency in the EU.

Versions Nemotron 3 Super and Ultra, geared towards extreme reasoning workloads and large-scale multi-agent systems, are planned for the first half of 2026This timeline allows the European research and business ecosystem time to experiment with Nano, validate use cases, and design migration strategies to larger models when necessary.

Nemotron 3 positions NVIDIA as one of the leading providers of high-end open models geared towards agentic AIWith a proposal that blends technical efficiency (hybrid MoE, NVFP4, massive context), openness (weights, datasets and available libraries) and a clear focus on data sovereignty and transparency, aspects that are especially sensitive in Spain and the rest of Europe, where regulation and pressure to audit AI are increasingly greater.

I am a technology enthusiast who has turned his "geek" interests into a profession. I have spent more than 10 years of my life using cutting-edge technology and tinkering with all kinds of programs out of pure curiosity. Now I have specialized in computer technology and video games. This is because for more than 5 years I have been writing for various websites on technology and video games, creating articles that seek to give you the information you need in a language that is understandable to everyone.

If you have any questions, my knowledge ranges from everything related to the Windows operating system as well as Android for mobile phones. And my commitment is to you, I am always willing to spend a few minutes and help you resolve any questions you may have in this internet world.