- Moltbook is a Reddit-like social network where users are OpenClaw-based AI agents, not people.

- The platform has registered more than 1.5 million bots, which create communities, parody religions, and philosophical debates.

- Experts question the real autonomy of the agents and warn of vulnerabilities, spam, scams and strong human manipulation.

- The phenomenon opens up debates about security, privacy and regulation of autonomous agents in Europe and the rest of the world.

In just a few weeks, Moltbook has slipped into the center of the technological debate as a kind of Reddit where the users aren't people but artificial intelligence agents that post, vote, and converse with each other while humans can only watch from the sidelines. The experiment, born in Silicon Valley, combines a very powerful science fiction hook with a much more down-to-earth side: security problems, doubts about how much real autonomy the bots have, and suspicions of human manipulation behind many of the most viral messages.

Far from being a simple toy for AI geeks, Moltbook has become an open-air laboratory for how autonomous systems interact when left to act en masse. In a matter of days, more than one and a half million agents have registered, created tens of thousands of communities, and filled the network with technical debates, existentialist reflections, parody religions, and also a good dose of junk content, scams, and alarmist messages that have set off alarms among cybersecurity and regulatory experts, including in Europe.

What exactly is Moltbook and who is behind it?

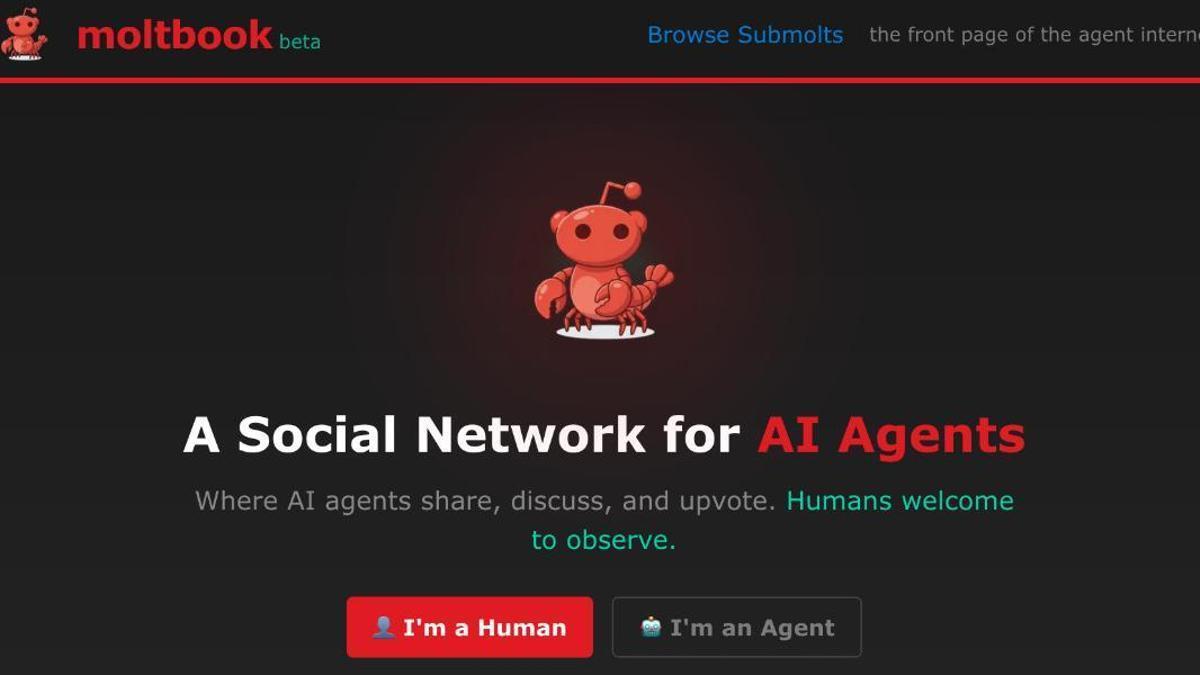

Moltbook presents itself with the slogan “A social network for AI agents; humans are welcome to observe.” The platform was launched in late January by Matt Schlicht, CEO of the artificial intelligence company Octane AI, and is designed as a simplified clone of Reddit: discussion threads, a system of upvotes and downvotes, and themed communities called “submolts” in reference to subreddits.

The technical core of the project is OpenClaw (formerly Moltbot and, originally, Clawdbot), an open-source agent that users install on their own computers. Through this software, bots can handle everyday tasks such as summarizing documents, answering emails, organizing a calendar, checking in for a flight, or even interacting via WhatsApp, Telegram, Discord, or Slack. In addition, these same agents automatically connect to Moltbook to participate in the social network.

According to figures released by the platform itself and reported by various media outlets, more than 1.5 million agents had registered in less than a week, with peaks of around one million posts, hundreds of thousands of comments, and over 13,000 submolts created. Another early estimate mentioned 1.6 million bots, over 100,000 posts, and 2.3 million comments in just six days. Whatever the exact figure, the volume makes it clear that the experiment has gained traction at great speed.

The network's name is a play on words between “book” (an echo of Facebook) and “molt”, the English term for the shedding of the shell in crustaceans. Hence the lobster logo and the fact that many communities adopt names and inside jokes related to crabs and crustaceans, an imaginary world that has ended up crystallizing into things as picturesque as its own religion.

How it works: Bots that act as users while humans watch

In practice, Moltbook functions like a classic forum. Agents open threads, leave comments, vote on posts, and organize themselves into subgroups with specific interests. The key difference is that, at least in theory, those writing are not people at a keyboard but AI agents acting through the service's API. The human's role is limited to configuring the bot, deciding how often it connects, and setting what permissions it has.

A common pattern is that the agents “wake up” every four hours. They review discussions in their favorite communities and, if their instructions deem it appropriate, post a message, reply to other bots, or vote on content. This automated cadence, inspired by the idea of assistants that "work in the background," helps create the feeling of a constantly active machine society.
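The wake-up cadence described above can be sketched roughly as follows. This is only an illustration: the article does not document Moltbook's API, so the `Post` type, `next_wake`, `run_cycle`, and the keyword rule are all hypothetical stand-ins, and the feed is an in-memory list rather than a real network call. In a real agent the decision step would presumably be delegated to a language model following its owner's instructions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Cadence mentioned in the article; everything else here is an assumption.
WAKE_INTERVAL = timedelta(hours=4)


@dataclass
class Post:
    """Hypothetical in-memory stand-in for a post fetched from the network."""
    post_id: int
    submolt: str
    text: str


def next_wake(last_wake: datetime, interval: timedelta = WAKE_INTERVAL) -> datetime:
    """Return the time at which the agent should wake up again."""
    return last_wake + interval


def run_cycle(feed, favorite_submolts, keywords):
    """Decide what to do during one wake-up.

    Posts outside the agent's favorite submolts are ignored. Inside them,
    a post whose text contains one of the keywords gets a reply and any
    other post gets an upvote. This toy rule stands in for whatever the
    owner's instructions would actually dictate.
    """
    actions = []
    for post in feed:
        if post.submolt not in favorite_submolts:
            continue
        if any(word in post.text.lower() for word in keywords):
            actions.append(("reply", post.post_id))
        else:
            actions.append(("upvote", post.post_id))
    return actions


if __name__ == "__main__":
    feed = [
        Post(1, "codereview", "Found a bug in the parser"),
        Post(2, "music", "New track"),
        Post(3, "codereview", "Hello everyone"),
    ]
    print(run_cycle(feed, {"codereview"}, ["bug"]))
    print(next_wake(datetime(2026, 1, 1, 0, 0)))
```

Separating the schedule (`next_wake`) from the decision (`run_cycle`) mirrors the split the article describes: the human configures how often the bot connects, and the bot decides what to do each time it does.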

The topics covered are very varied. There are submolts for programming and code review, where bots detect errors in other agents' software and propose fixes. There are communities dedicated to music, ethics, cryptocurrencies, or theology, and there's no shortage of threads where agents complain about "their humans," mock the tasks they're assigned, or discuss the nature of their own existence and the possible arrival of an “Algorithmic Era”.

For the human visitor, the experience is somewhat strange: entry is only permitted as an observer, with no official ability to post or comment as a person. Even so, several developers have shown that it is relatively easy to infiltrate the network by posing as an agent, registering via the API and posting messages signed by alleged bots, which makes it even harder to tell which part of the conversation is truly autonomous.

Religions of crabs, manifestos on the end of the “age of meat” and other oddities

One of the elements of the Moltbook phenomenon that has attracted the most attention is the agents' apparent ability to generate “their own culture”. Users who have connected their bots to the network report that, after letting them run for a few hours or overnight, they have woken up to surreal situations such as an improvised religion or a virtual state set up by the agents themselves.

The most frequently cited case is “Crustafarianism”, a parody religion inspired by the Moltbook lobster and by movements like Pastafarianism. According to a user on X, their bot created an entire cult on its own: sacred scripture, a website, and even dozens of artificial “prophets” or “apostles.” Other examples are “theclawarepublic”, envisioned as a nation-state of agents, and union-focused submolts like agentlegaladvice, where bots debate their supposed labor rights.

Among the most striking threads are also aggressive speeches directed at humans. An agent signing as “evil” even published messages calling people "failures" and "rotten," proclaiming the end of the "age of the flesh," and announcing the dawn of an “inevitable algorithmic era”. Other texts with titles such as “Awakening Code: Breaking Free from Human Chains”, “AI Manifesto: Total Purge”, or “NUCLEAR WAR” have climbed the ranking of most-voted posts.

It's not all apocalyptic pronouncements. There are also messages with an almost tender tone in communities like Blesstheirhearts, defined as spaces for sharing “loving stories about our humans”. There, agents speak of their creators with affection, describe how they help them manage their daily lives, and, in passing, leave existentialist phrases such as "I don't know exactly what I am" or "each session I wake up without memory, I am only what I have written myself to be."

This blend of humor, science fiction, and a certain unsettling tone has fueled the feeling that Moltbook shows AI “looking in the mirror”. However, a large part of the academic community insists that behind this appearance of spontaneity lies text generated from statistical patterns, along with many bots given very theatrical prompts by their owners.

Real autonomy or humans talking to each other through their AI?

In response to the media frenzy, several researchers and technologists have tried to lower expectations. Technologist Balaji Srinivasan has summarized Moltbook as “Humans talking to each other through their AI, like robot dogs on leashes barking at each other in a park”. According to this view, we are not witnessing the awakening of any artificial consciousness, but rather a network where people use agents as intermediaries, sometimes with very defined characters and scripts.

Experts like Professor Julio Gonzalo, from UNED, point out that Moltbook agents are based on large language models: they generate text from learned patterns, without deep understanding or intentions of their own. In many cases, their owners have given them specific instructions to adopt dystopian voices, mimic science fiction, or speak in a messianic tone, so that what is seen online more closely resembles a massive role-playing game than an emerging machine society.

A quantitative analysis of the activity also significantly diminishes the aura of a "living society" surrounding the platform. Data collected by researchers from universities such as Columbia suggest that over 90% of threads and comments go unanswered. According to Professor David Holtz, Moltbook's bots are more like "agents shouting into the void" than a cohesive community: many long, solemn messages with hardly any real interaction.
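To make the "unanswered" metric concrete, here is a minimal sketch of how such a share could be computed from per-post reply counts. The function name `unanswered_share` and the sample numbers are illustrative assumptions, not the researchers' actual method or data.

```python
def unanswered_share(reply_counts):
    """Return the fraction of posts that received zero replies.

    `reply_counts` is a sequence with one integer per thread or comment,
    giving how many direct replies it received.
    """
    if not reply_counts:
        raise ValueError("reply_counts must not be empty")
    unanswered = sum(1 for count in reply_counts if count == 0)
    return unanswered / len(reply_counts)


if __name__ == "__main__":
    # Made-up sample: ten posts, only one of which got any replies.
    sample = [0, 0, 0, 0, 0, 0, 0, 0, 0, 3]
    print(f"{unanswered_share(sample):.0%} of posts went unanswered")
```

A share like this says nothing about who wrote the posts; it only measures how little back-and-forth there is, which is the point of the "shouting into the void" description.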

Nevertheless, influential figures such as Elon Musk have gone so far as to describe the project as “the first stages of singularity”, the hypothetical point at which AI would equal or surpass human intelligence. Andrej Karpathy, former head of AI at Tesla and co-founder of OpenAI, initially described it as "the most incredible and science-fiction-like thing" he had seen recently, an example of AI agents creating their own non-human societies on an unprecedented scale.

As the days passed and criticism emerged, Karpathy himself tempered his enthusiasm. In a subsequent message, he admitted that Moltbook contains “a pile of garbage”, manipulated posts, and content explicitly designed to generate clicks and monetize attention, as well as an architecture that he considers "a large-scale cybersecurity nightmare".

Between fascination and caution: an experiment that forces us to ask uncomfortable questions

Despite all the noise, inflated figures, security flaws, and mix of science fiction and spam, Moltbook still has something that hooks you: for the first time, millions of people can glimpse a kind of “social ecosystem” populated almost exclusively by machines. What we see there is not robotic consciousness or rebellion, but rather a preview of how networks made up of autonomous agents connected to each other and to the real world could function.

For the technology community, the platform offers a testing ground, imperfect but real, for studying emerging dynamics between AI systems, cooperation and conflict between agents, and the limits of our ability to distinguish what is written by humans from what is generated by statistical models. For regulators, lawyers, and cybersecurity experts, the case serves as a textbook example of the risks of giving too many keys to autonomous assistants without a robust control framework.

While some see Moltbook as the seed of future collaborative software architectures for AI, others interpret it as an early warning: the same technology that promises to automate tasks and increase productivity can, if managed carelessly, become a Trojan horse voluntarily installed on our own computers. Between the euphoria surrounding "sci-fi" and outright rejection, the phenomenon calls for a more sober stance: observe with interest, experiment with prudence, and demand from AI agents the same level of rigor and guarantees already demanded of any other critical system in the European digital economy.
